Davinsy, our autonomous machine learning system, integrates at its core Deeplomath, a deep learning kernel that learns continuously from real-time incoming data. This paper uses the main characteristics of Deeplomath to show how ML model drift (https://www.ibm.com/cloud/watson-studio/drift) can be reduced through continuous learning from minimalist, event-driven datasets. To be precise, one should call this the drift of model predictions with respect to data.

Model drift and data bias

It is well known that any dataset contains errors, especially if it is built by humans, through crowdsourcing and so forth. From a mathematical point of view, the variance of an estimate built from the data decreases as the dataset grows. There are several types of bias ML needs to face:

- https://en.wikipedia.org/wiki/Bias%E2%80%93variance_tradeoff

- https://www.telusinternational.com/articles/7-types-of-data-bias-in-machine-learning

- https://blog.taus.net/9-types-of-data-bias-in-machine-learning

One also expects the bias to decrease with the dataset size, even though this is not always observed in practice.
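The difference between the two behaviours can be illustrated with a small simulation. The sketch below is only an illustration under stated assumptions: a hypothetical sensor measures a constant `true_value` with Gaussian noise plus a fixed systematic `offset` (all names and values are ours, not taken from Davinsy). The spread of the estimated mean shrinks as the dataset grows, while the systematic error stays where it is.

```python
import random
import statistics


def estimate_spread_and_bias(n_samples, true_value=10.0, offset=0.5,
                             noise_std=2.0, n_trials=400, seed=0):
    """Return (spread of the sample mean across trials, average error of the mean).

    Each trial draws n_samples noisy measurements that share the same
    systematic offset (hypothetical miscalibrated sensor).
    """
    rng = random.Random(seed)
    means = []
    for _ in range(n_trials):
        samples = [true_value + offset + rng.gauss(0.0, noise_std)
                   for _ in range(n_samples)]
        means.append(statistics.fmean(samples))
    spread = statistics.pstdev(means)             # shrinks roughly like 1/sqrt(n)
    bias = statistics.fmean(means) - true_value   # stays close to the offset
    return spread, bias


for n in (10, 100, 1000):
    spread, bias = estimate_spread_and_bias(n)
    print(f"n={n:5d}  spread of the mean={spread:.3f}  bias={bias:+.3f}")
```

In this toy setting, collecting more data reduces the variance but does nothing against an error that every sample shares.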

Bias all around and of all kinds

Common types of data bias in ML include selection bias, outliers, measurement bias, recall bias, observer bias, exclusion bias, racial bias and association bias, together with their overfitting and underfitting consequences on models. Most of these are related to data collection and preparation. Overfitting and underfitting are especially important. When a model is fitted too closely to its training data, it also learns from inaccurate data entries and noise. This leads to false predictions on new data and is referred to as overfitting. Underfitting, on the other hand, occurs when a machine learning model cannot capture the underlying trend of the data, which is commonly observed when the model is too simple for the data or has not learned enough from it. This leads to a model with high bias.
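Both failure modes can be reproduced on a toy problem. The sketch below uses a k-nearest-neighbour regressor as a stand-in model (it is not the Deeplomath kernel, and all names and parameters are assumptions for illustration): a small `k` gives a very flexible model that memorises the noise, a very large `k` gives a rigid model that misses the trend.

```python
import math
import random


def knn_predict(train_x, train_y, x, k):
    """Average the targets of the k training points closest to x."""
    nearest = sorted(range(len(train_x)), key=lambda i: abs(train_x[i] - x))[:k]
    return sum(train_y[i] for i in nearest) / k


def mse(train_x, train_y, xs, ys, k):
    """Mean squared error of the k-NN model on the points (xs, ys)."""
    errors = [(knn_predict(train_x, train_y, x, k) - y) ** 2
              for x, y in zip(xs, ys)]
    return sum(errors) / len(errors)


def trend(x):
    """Underlying trend that the noisy training data is sampled from."""
    return math.sin(2 * math.pi * x)


def fit_report(n_train=100, noise_std=0.3, ks=(1, 9, 100), seed=0):
    """Return {k: (training error, error against the noise-free trend)}."""
    rng = random.Random(seed)
    train_x = [(i + 0.5) / n_train for i in range(n_train)]
    train_y = [trend(x) + rng.gauss(0.0, noise_std) for x in train_x]
    test_x = [i / 200 for i in range(201)]
    test_y = [trend(x) for x in test_x]
    return {k: (mse(train_x, train_y, train_x, train_y, k),
                mse(train_x, train_y, test_x, test_y, k)) for k in ks}


for k, (train_err, test_err) in fit_report().items():
    print(f"k={k:3d}  train error={train_err:.3f}  error vs trend={test_err:.3f}")
```

With `k=1` the training error is zero because every training point is its own nearest neighbour, yet the error against the true trend is higher than for the intermediate `k`: the model has learned the noise. With `k` equal to the dataset size the model predicts a constant and misses the trend entirely.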

Bias is the rule, not the exception

An important situation in industrial applications is that errors can appear in the learning data, even if initially absent, because the behavior of a system and its environment can change over time. This can be because the system ages or simply because its noisy environment has changed. This means that error in data should be thought of not as the exception but as the rule.

AI vicious circle

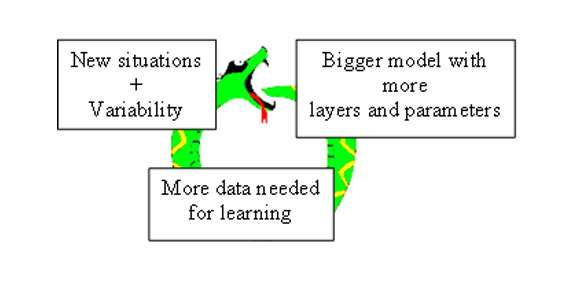

Having said that, it is well known that ML models, and especially deep learning models, require larger and larger static datasets to avoid overfitting as the model size increases. We therefore have a vicious circle: larger models require larger datasets, which in turn require larger models for increased accuracy. It is also known that the accuracy of an ML model is bounded by the accuracy of the underlying dataset.

AI drift is systemic

Coming back to our noisy environment, such a change means that an ML model built on a static dataset will necessarily drift, regardless of how large the dataset is, and especially when the input dimension is large.

Mathematics is the best guide to make sure things do not go south.

Therefore, to understand why the triple curse of dimensionality (space dimension, network size, database size) makes static AI training inefficient, always think of:

- how fast $2^n$ grows,

- the fact that the ratio of the volumes of the $L_p$ and $L_\infty$ unit balls tends to 0 as the dimension $n$ grows: for $p=2$ and $n=20$, the ratio is already below $10^{-7}$, and $n=20$ is not large,

- the fact that when the dimension is large, a ball no longer looks like the familiar low-dimensional ball, which makes sampling hazardous because distance functions are no longer discriminating: all points tend to be at the same distance from each other.
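The three points above can be checked numerically. The following sketch uses the closed-form volume of the unit $L_2$ ball, $\pi^{n/2} / \Gamma(n/2 + 1)$, against the volume $2^n$ of the unit $L_\infty$ ball, and a small random experiment for the distances. The function names, the sample size and the uniform sampling in the unit cube are our own choices for illustration.

```python
import math
import random


def ball_to_cube_volume_ratio(n):
    """Volume of the unit L2 ball divided by the volume of the unit Linf ball [-1, 1]^n."""
    ball = math.pi ** (n / 2) / math.gamma(n / 2 + 1)
    cube = 2.0 ** n
    return ball / cube


def relative_distance_spread(n, n_points=200, seed=0):
    """(max - min) / min of the distances from one random point to all the others."""
    rng = random.Random(seed)
    points = [[rng.random() for _ in range(n)] for _ in range(n_points)]
    dists = [math.dist(points[0], p) for p in points[1:]]
    return (max(dists) - min(dists)) / min(dists)


for n in (2, 10, 20, 100):
    print(f"n={n:3d}  2^n={2 ** n:.3e}  "
          f"L2/Linf volume ratio={ball_to_cube_volume_ratio(n):.3e}")

for n in (2, 20, 1000):
    print(f"n={n:4d}  relative spread of distances={relative_distance_spread(n):.3f}")
```

For $n=20$ the ratio comes out at about $2.5 \times 10^{-8}$, in line with the bound quoted above, and the relative spread of distances shrinks as the dimension grows, which is the sense in which distances stop discriminating between points.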

Continuous learning is the only cure

The only way to keep ML models from drifting is to continuously adapt them to the current environment. When the environment changes fast, this needs to be done with nearly zero latency, which is clearly incompatible with large models and with training that requires large remote resources. Therefore, user interventions or iterative procedures are off the table.
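A toy experiment makes the contrast concrete. The sketch below is a deliberately simple stand-in, not the Deeplomath algorithm: a one-dimensional nearest-centroid classifier, an environment whose additive offset grows over time, and the assumption that the true label of each event becomes available right after the prediction. The static model keeps the centroids it had at the start, while the adaptive one updates them in a single pass after every event.

```python
import random


def run_drift_experiment(n_events=2000, total_shift=4.0, noise_std=0.5,
                         alpha=0.1, seed=0):
    """Compare a static and a continuously adapted nearest-centroid classifier.

    The environment adds an offset that grows linearly from 0 to total_shift.
    Returns the accuracy of each model over the whole stream of events.
    """
    rng = random.Random(seed)
    static_centroids = {0: -1.0, 1: 1.0}      # learned once, never updated
    adaptive_centroids = dict(static_centroids)
    hits = {"static": 0, "adaptive": 0}

    def classify(centroids, x):
        return min(centroids, key=lambda label: abs(x - centroids[label]))

    for t in range(n_events):
        shift = total_shift * t / n_events
        label = rng.randrange(2)
        x = (-1.0 if label == 0 else 1.0) + shift + rng.gauss(0.0, noise_std)

        # Predict first, then learn from the event (assumed to be labelled).
        hits["static"] += classify(static_centroids, x) == label
        hits["adaptive"] += classify(adaptive_centroids, x) == label

        # Single-pass update: no iterative retraining, no remote resources.
        adaptive_centroids[label] += alpha * (x - adaptive_centroids[label])

    return {name: count / n_events for name, count in hits.items()}


print(run_drift_experiment())
```

In this setting the static model degrades as the environment moves away from its training conditions, whereas the adaptive model keeps tracking it at the cost of one cheap update per event.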

DavinSy avoids this whole problem through continuous, event-based few-shot learning at the endpoint, with a real-time automatic reconstruction of the Deeplomath model. This allows continuous adaptation of the model in a noisy environment, thus ensuring that model drift with respect to the data cannot happen. Being a few-shot learning solution, DavinSy embeds a ‘support’ labelled dataset of raw data to adapt to additive noise. If the noise changes, Bondzai rebuilds its network for the noise-augmented dataset.
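The idea of rebuilding a model from a small support set whenever the noise changes can be sketched as follows. This is not the Deeplomath reconstruction, whose details are not described here: we assume, purely for illustration, a nearest-prototype classifier, two-dimensional features, hypothetical class names `idle` and `alarm`, and a noise that acts as a constant additive offset.

```python
import math

# Hypothetical 'support' set: a few labelled raw examples per class.
SUPPORT = {
    "idle": [(0.0, 0.1), (0.1, 0.0), (-0.1, 0.0)],
    "alarm": [(2.0, 2.1), (2.1, 1.9), (1.9, 2.0)],
}


def rebuild_prototypes(support, noise):
    """Rebuild one prototype per class from the noise-augmented support set."""
    prototypes = {}
    for label, examples in support.items():
        augmented = [tuple(v + n for v, n in zip(example, noise))
                     for example in examples]
        prototypes[label] = tuple(sum(axis) / len(augmented)
                                  for axis in zip(*augmented))
    return prototypes


def classify(prototypes, x):
    """Return the label of the prototype closest to x."""
    return min(prototypes, key=lambda label: math.dist(prototypes[label], x))


# An 'idle' event observed while the ambient noise adds (1.5, 1.5).
observation = (1.5, 1.6)

quiet_model = rebuild_prototypes(SUPPORT, noise=(0.0, 0.0))
noisy_model = rebuild_prototypes(SUPPORT, noise=(1.5, 1.5))

print(classify(quiet_model, observation))  # model built for the quiet setting: 'alarm'
print(classify(noisy_model, observation))  # model rebuilt for the current noise: 'idle'
```

The raw support examples never change; only the model derived from them is rebuilt, which keeps the cost of adaptation proportional to the size of the support set.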

Davinsy provides model behavior monitoring and metric tools that tune the pace of its continuous event-driven learning, making sure model degradation is avoided.
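As a rough idea of what such monitoring can look like, the sketch below tracks a rolling error rate and signals when a new learning event is due. It is a hypothetical illustration: the class name `PaceMonitor`, the window size and the threshold are our assumptions and do not describe the actual Davinsy tools.

```python
from collections import deque


class PaceMonitor:
    """Track a rolling error rate and signal when relearning is due."""

    def __init__(self, window=50, max_error_rate=0.2):
        self.outcomes = deque(maxlen=window)   # True for a correct prediction
        self.max_error_rate = max_error_rate

    def error_rate(self):
        """Fraction of wrong predictions in the current window."""
        if not self.outcomes:
            return 0.0
        return 1.0 - sum(self.outcomes) / len(self.outcomes)

    def should_relearn(self):
        """Only decide once the window is full, to avoid reacting to a few events."""
        window_full = len(self.outcomes) == self.outcomes.maxlen
        return window_full and self.error_rate() > self.max_error_rate

    def record(self, was_correct):
        """Store the outcome of one event and return the relearning signal."""
        self.outcomes.append(bool(was_correct))
        return self.should_relearn()


monitor = PaceMonitor(window=10, max_error_rate=0.2)
for outcome in [True] * 10 + [False] * 3:
    relearn = monitor.record(outcome)
print(f"error rate={monitor.error_rate():.2f}  relearn={relearn}")
```

A shorter window or a lower threshold makes learning events more frequent, which is one simple way to tune the pace of adaptation to how fast the environment changes.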

Event-driven continuous learning compartmentalizes biases

An obvious consequence of event-driven dataset construction is that bias in the data will necessarily come from failures in the local acquisition device, and therefore it cannot propagate. On the other hand, if a static dataset is biased, every model built on top of it will be contaminated. As a consequence, event-based continuous learning not only avoids model drift but also reduces data bias, especially the biases related to the human factor mentioned above.