
DaVinSy Voice

DAVINSY VOICE: VOICE-SOUND AIoT CONTROL SYSTEM

DavinSy Voice is an instantiation of the DavinSy system for voice-activated front-end systems. The voice application is based on the “speech to intent” principle and is packaged as a cross-platform library. Implementations are available on several host platforms, such as the STMicroelectronics IoTNode and SensorTile evaluation boards and the Raspberry Pi 3B+.

DavinSy Voice provides several audio-specific Virtual Models:

- Voice Activity Detection: Distinguishes voice from background noise,

- Speaker Identification: Differentiates the voice of users,

- Wake Word Detection: Waits for a specific keyword to trigger actions,

- Command Recognition: Associates voice commands with intents,

- Acoustic Scene Classification: Identifies audio environments (street, restaurant, office…).

Each Virtual Model can be fine-tuned for specific needs.

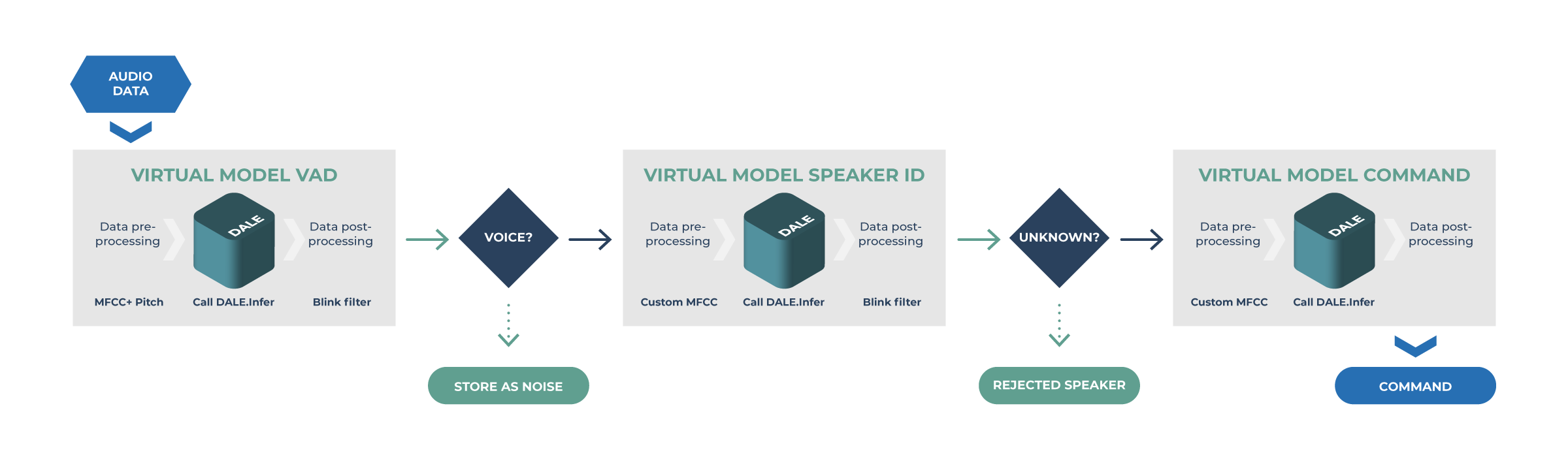

For instance, the example below shows how to define three audio Virtual Models for a “secured voice command activation” application in the Application Service Layer (ASL) of DavinSy Voice:

Command Voice Activation VIRTUAL MODELS

| VIRTUAL MODEL | Voice Activity Detection | Speaker Identification | Command Recognition |
|---|---|---|---|
| Type | audio | audio | audio |
| Mode | Classification | Classification | Classification |
| Rejection | None | Medium | High |
| Preprocess | 16 acoustic parameters | 140 acoustic parameters; time data augmentation; noise data augmentation | 140 acoustic parameters; time data augmentation; noise data augmentation |
| Input Size | 16 | 140 | 140 |
| Output Size | 2 (Voice/Noise) | 6 (5 speakers + impostor) | 11 (10 different commands) |
| Resource (maxRAM) | 20 kB | 40 kB | 40 kB |
| Postprocess | Anti-flickering on 6 frames | Anti-flickering on 4 frames | None |
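The Voice Activity Detection and Speaker Identification models above specify “anti-flickering” post-processing over 6 and 4 frames. The source does not define the term; a common interpretation, assumed here, is a debounce: the reported class changes only once the same new class has been predicted on N consecutive frames. A minimal Python sketch under that assumption (the `AntiFlicker` class is hypothetical, not a DavinSy API):

```python
from typing import Hashable, Optional


class AntiFlicker:
    """Debounce per-frame class predictions (hypothetical sketch).

    The reported label switches only after the same new label has been
    predicted on `n_frames` consecutive frames.
    """

    def __init__(self, n_frames: int) -> None:
        self.n_frames = n_frames
        self.current: Optional[Hashable] = None    # label currently reported
        self.candidate: Optional[Hashable] = None  # label trying to take over
        self.count = 0                             # streak length of candidate

    def update(self, label: Hashable) -> Optional[Hashable]:
        if self.current is None:
            # First frame: adopt the prediction directly.
            self.current = label
            return self.current
        if label == self.current:
            # Prediction agrees with the output: cancel any pending switch.
            self.candidate, self.count = None, 0
            return self.current
        if label == self.candidate:
            self.count += 1
        else:
            # A different competing label starts its own streak.
            self.candidate, self.count = label, 1
        if self.count >= self.n_frames:
            self.current, self.candidate, self.count = label, None, 0
        return self.current


if __name__ == "__main__":
    vad = AntiFlicker(n_frames=6)
    frames = ["noise"] * 3 + ["voice"] * 2 + ["noise"] + ["voice"] * 6
    print([vad.update(f) for f in frames])
```

With 6 frames, the two-frame burst of “voice” is ignored and the output switches only on the sixth consecutive “voice” frame. Longer windows trade reaction time for stability, which may explain why the VAD uses a longer window than Speaker Identification.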

Example of application sequence representation:

Taking the application requirements into account, the ASL identifies redundant operations across Virtual Models to optimize computation and storage.
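To illustrate the kind of redundancy involved, the Virtual Model definitions above can be written as plain data and grouped by their feature-extraction stage. This Python sketch is hypothetical: the `VirtualModel` fields and the `shared_preprocessing` helper mirror the listed definitions but are not the DavinSy API, and grouping on the parameter count alone is a simplification (two extractors with the same count could still differ).

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass(frozen=True)
class VirtualModel:
    """Hypothetical mirror of an ASL Virtual Model definition."""
    name: str
    rejection: str                # "None", "Medium" or "High"
    n_features: int               # acoustic parameters (= input size)
    n_outputs: int
    max_ram_kb: int
    augmentations: tuple = ()     # training-time data augmentation
    anti_flicker_frames: int = 0  # 0 means no post-processing


MODELS = [
    VirtualModel("Voice Activity Detection", "None", 16, 2, 20,
                 anti_flicker_frames=6),
    VirtualModel("Speaker Identification", "Medium", 140, 6, 40,
                 ("time", "noise"), anti_flicker_frames=4),
    VirtualModel("Command Recognition", "High", 140, 11, 40,
                 ("time", "noise")),
]


def shared_preprocessing(models):
    """Group model names by feature-extraction stage (keyed on parameter count).

    Each key is a front end that only needs to run once per audio frame,
    however many models consume its output.
    """
    groups = defaultdict(list)
    for m in models:
        groups[m.n_features].append(m.name)
    return dict(groups)


if __name__ == "__main__":
    for n, names in shared_preprocessing(MODELS).items():
        print(f"{n} parameters -> {names}")
```

Here the 140-parameter front end is shared by Speaker Identification and Command Recognition, so the three models need only two feature extractors.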

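Benchmarking the Command Acceptance Rate (CAR) against AI competitors that rely on cloud-based learning shows that DavinSy Voice, with fully embedded learning, comes within 2 percentage points of Picovoice Rhino and clearly outperforms Amazon Lex: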

| System | CAR in quiet env. | CAR in noisy env. (SNR 6 dB) |
|---|---|---|
| DavinSy Voice, any language (no cloud, complete embedded AI) | 96% | 91% |
| Picovoice Rhino, single language* (cloud learning, embedded inference) | 98% | 93% |
| Amazon Lex, multi-language* | 87% | 75% |

*Source: Picovoice Docs, “Benchmark to compare Rhino and Amazon Lex NLU”.

Comparison between DavinSy Voice and competitors on multi-modal voice features.

DavinSy Voice is the only all-in-one embedded voice front end.

| System | Command (speech-to-intent) | Voice Activity Detection | Speaker Identification | Wake Word | Acoustic Scene Classification |
|---|---|---|---|---|---|
| DavinSy Voice | Yes | Yes | Yes** | Yes | Yes |
| Picovoice | Yes, requires Rhino | Yes, requires Cobra | No | Yes, requires Porcupine | No |
| Sensory | Yes, requires TrulyHandsfree Voice Control | No | Yes, requires TrulySecure Speaker Verification | Yes, requires TrulyHandsfree Voice Control | Yes, requires SoundID |
| Fluent.ai | Yes, requires AIR | No | No | Yes, requires Wakeword | No |

** Speaker identification works from a 1 s voice sample in text-dependent mode (e.g., when coupled with commands) and from a 3 s voice sample in text-independent mode.

Learning on target means we can leverage real-time ground truth to improve model accuracy. In DavinSy Voice, this feedback is immediate thanks to the main property of DALE, i.e., its ability to handle real live data on the device and to build a model in a few seconds. Because of this short latency, when the results diverge from the ground truth, the equipment can decide to correct them and rebuild the model within the same operating cycle. As a result, DavinSy Voice continuously mitigates model drift.

For example, imagine that you get sick and your voice changes. Your voice-secured device then starts rejecting your commands because it fails to identify you. With a classical cloud-dependent solution, you would be stuck; with DavinSy, you simply confirm that the rejected recording was your voice. DavinSy then regenerates a new model that accounts for this new data within a few seconds and accepts your commands again.
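The reject, feedback, rebuild loop described above can be sketched in a few lines. DALE's actual learning algorithm is not described here, so this Python sketch swaps in a simple nearest-centroid classifier with a distance-based rejection threshold; the class name, the threshold value, and the 2-D toy features are all hypothetical.

```python
import math
from collections import defaultdict


class CentroidSpeakerModel:
    """Hypothetical stand-in for on-device learning: nearest centroid + rejection."""

    def __init__(self, reject_distance: float) -> None:
        self.reject_distance = reject_distance
        self.samples = defaultdict(list)  # speaker -> stored feature vectors
        self.centroids = {}               # speaker -> mean feature vector

    def add_sample(self, speaker, features) -> None:
        self.samples[speaker].append(list(features))

    def rebuild(self) -> None:
        """Recompute every centroid from the stored samples."""
        self.centroids = {
            spk: [sum(col) / len(vecs) for col in zip(*vecs)]
            for spk, vecs in self.samples.items()
        }

    def identify(self, features):
        """Return the closest enrolled speaker, or None to reject the record."""
        best, best_d = None, math.inf
        for spk, centroid in self.centroids.items():
            d = math.dist(features, centroid)
            if d < best_d:
                best, best_d = spk, d
        return best if best_d <= self.reject_distance else None

    def correct(self, features, true_speaker) -> None:
        """User feedback on a rejected record: store it and rebuild at once."""
        self.add_sample(true_speaker, features)
        self.rebuild()


if __name__ == "__main__":
    model = CentroidSpeakerModel(reject_distance=1.0)
    model.add_sample("alice", [0.0, 0.0])
    model.add_sample("alice", [0.2, 0.0])
    model.add_sample("bob", [5.0, 5.0])
    model.rebuild()

    hoarse = [1.3, 0.0]             # toy features: Alice while sick
    print(model.identify(hoarse))   # None (rejected)
    model.correct(hoarse, "alice")  # "that was my voice"
    print(model.identify(hoarse))   # alice
```

Because rebuilding only averages the stored samples, the correction takes effect immediately, which is the property the paragraph above relies on.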

Compared with cloud-based training solutions, the main benefits are:

- DavinSy Voice learns and adapts to any language, accent, or lingo while maintaining privacy, which makes it possible to deploy the same product everywhere in the world,

- DavinSy Voice instantly adapts to variations in background noise,

- thanks to Virtual Models, DavinSy Voice extends the durability of the model to the lifetime of the product, simplifying maintenance,

- its hyper-miniaturized footprint and collaborative AI allow DavinSy Voice to be distributed across edge devices and beyond, down to devices such as smart power plugs. For example, in a smart home, DavinSy Voice can run on multiple low-cost voice-capturing devices (e.g., power plugs) that communicate with each other to localize the speaker and recognize their identity, improving accuracy in different and difficult acoustic environments,

- DavinSy Voice is secured by voice biometric identification of the speaker, a personalized keyword, and a local database,

- DavinSy Voice provides natural, interactive, and transparent reinforcement learning through fast learning and minimal inference latency.

Finally, like any DavinSy-based product, DavinSy Voice offers native device management and security features.

DavinSy Voice Cortex-M4 demonstration:

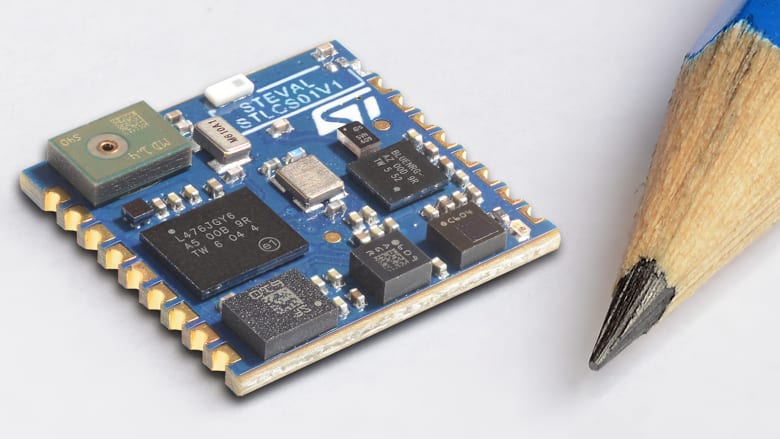

- Evaluated on the IoTNode and SensorTile boards (STM32L4: Cortex-M4 at 80 MHz, 128 kB RAM)

- Virtual Models: Voice Activity Detection and Speaker Identification (up to 5 different speakers)

- Memory footprint: 70 kB RAM, 256 kB Flash

- Training time: under 5 seconds (executed in the background)

- Inference time: under 30 ms for VAD, under 100 ms for Speaker Identification
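A quick budget check relates the per-model `maxRAM` limits declared in the Virtual Model definitions to the boards above. This Python sketch only sums the declared limits (the dictionary keys are hypothetical short names); it ignores the runtime, audio buffers, and stack that make up the rest of the measured footprint, so it gives a lower bound rather than a prediction of the reported figures.

```python
# Declared maxRAM per Virtual Model (kB), from the ASL example definitions.
MAX_RAM_KB = {
    "vad": 20,         # Voice Activity Detection
    "speaker_id": 40,  # Speaker Identification
    "commands": 40,    # Command Recognition
}


def declared_ram_kb(models):
    """Sum of declared maxRAM for the Virtual Models deployed together."""
    return sum(MAX_RAM_KB[m] for m in models)


def headroom_kb(models, board_ram_kb):
    """RAM left on the board once the declared model limits are reserved."""
    return board_ram_kb - declared_ram_kb(models)


if __name__ == "__main__":
    # Cortex-M4 demo: VAD + Speaker Identification on a 128 kB STM32L4.
    print(declared_ram_kb(["vad", "speaker_id"]))   # 60
    print(headroom_kb(["vad", "speaker_id"], 128))  # 68
```

The declared 60 kB for the two Cortex-M4 models is consistent with the 70 kB RAM footprint reported for that demonstration.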

DavinSy Voice Raspberry Pi 3B+ demonstration:

- Evaluated on a Raspberry Pi 3B+ board (quad-core Cortex-A53 at 1.4 GHz, 1 GB RAM)

- Virtual Models: Voice Activity Detection, Speaker Identification (up to 10 different speakers), Command Recognition (up to 10 commands per speaker)

- Memory footprint: 512 kB RAM, 256 kB Flash

- Training time: under 3 seconds (executed in the background)

- Inference time: under 10 ms for VAD, under 30 ms for Speaker Identification, under 50 ms for Command Recognition

DavinSy Voice implementation features

Any Language

Because DavinSy Voice learns from the user’s voice live on the target device, it handles any language seamlessly. It also continuously adapts to accents and variations in the voice.

Enrolled Wake Word

After a few recordings, the system will be able to recognize the enrolled wake word and trigger subsequent actions.
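Enrollment from a few recordings can be illustrated with classic template matching: store the feature sequences of the enrollment recordings and accept a new utterance if its dynamic time warping (DTW) distance to any template falls below a threshold. DTW is a swapped-in, well-known few-shot technique used here for illustration only; the source does not say how DavinSy matches wake words, and the threshold and 1-D toy features are hypothetical.

```python
import math


def dtw_distance(a, b):
    """Length-normalized DTW distance between two feature sequences.

    Each element is a feature vector (a sequence of floats); the local cost
    is the Euclidean distance between frames.
    """
    n, m = len(a), len(b)
    cost = [[math.inf] * (m + 1) for _ in range(n + 1)]
    cost[0][0] = 0.0
    for i in range(1, n + 1):
        for j in range(1, m + 1):
            d = math.dist(a[i - 1], b[j - 1])
            cost[i][j] = d + min(cost[i - 1][j],      # advance in a only
                                 cost[i][j - 1],      # advance in b only
                                 cost[i - 1][j - 1])  # advance in both
    return cost[n][m] / (n + m)


class WakeWordDetector:
    """Accept utterances close to any enrolled template (hypothetical)."""

    def __init__(self, threshold: float) -> None:
        self.threshold = threshold
        self.templates = []

    def enroll(self, features) -> None:
        self.templates.append(features)

    def detect(self, features) -> bool:
        return any(dtw_distance(features, t) <= self.threshold
                   for t in self.templates)


if __name__ == "__main__":
    detector = WakeWordDetector(threshold=0.3)
    detector.enroll([[0.0], [1.0], [2.0], [1.0], [0.0]])
    # Same contour spoken more slowly: DTW absorbs the timing difference.
    print(detector.detect([[0.0], [0.0], [1.0], [2.0], [2.0], [1.0], [0.0]]))  # True
    print(detector.detect([[2.0]] * 5))  # False
```

DTW tolerates differences in speaking rate, which is why a handful of templates can be enough for a personal wake word.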

Robust to noise

DavinSy enriches its database through live data augmentation using captured noise.

The resulting model will be able to recognize commands in harsh noise conditions.
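A standard way to augment a clean recording with captured noise is to scale the noise so that the mixture reaches a target signal-to-noise ratio, SNR_dB = 10·log10(P_signal / P_noise). The Python sketch below shows that generic technique; the function names and the 6 dB default (borrowed from the benchmark conditions above) are illustrative, and DavinSy’s own augmentation pipeline is not documented here.

```python
import math
import random


def power(x):
    """Mean power of a sequence of samples."""
    return sum(v * v for v in x) / len(x)


def mix_at_snr(clean, noise, snr_db=6.0, rng=None):
    """Return `clean` plus a random excerpt of `noise` scaled to `snr_db` dB SNR."""
    if len(noise) < len(clean):
        raise ValueError("noise recording must be at least as long as clean")
    rng = rng or random.Random()
    start = rng.randrange(len(noise) - len(clean) + 1)
    excerpt = noise[start:start + len(clean)]
    noise_power = power(excerpt)
    if noise_power == 0:
        raise ValueError("noise excerpt is silent")
    # Choose g so that 10*log10(P_clean / (g**2 * P_noise)) == snr_db.
    gain = math.sqrt(power(clean) / (noise_power * 10 ** (snr_db / 10)))
    return [c + gain * n for c, n in zip(clean, excerpt)]


if __name__ == "__main__":
    rng = random.Random(0)
    clean = [math.sin(2 * math.pi * 440 * t / 16000) for t in range(1600)]
    noise = [rng.gauss(0.0, 1.0) for _ in range(4000)]  # stand-in for captured noise
    # Several noisy copies of one enrollment sample, at different SNRs.
    augmented = [mix_at_snr(clean, noise, snr, rng) for snr in (20.0, 12.0, 6.0)]
    print(len(augmented), len(augmented[0]))  # 3 1600
```

Drawing different noise excerpts at several SNRs turns one clean enrollment sample into many training examples without asking the user for new recordings.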

Performance on par

DavinSy Voice performance is on par with the competition, ranging from 95% of commands recognized in silent conditions to 85% at 6 dB SNR.

Ubiquitous

DavinSy Voice devices can detect each other on a local network, creating a ubiquitous grid of microphones. Such devices can make decisions based on the user’s location or mitigate the effects of reverberation.
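One simple way several microphones could combine their results is late fusion: each device reports its per-speaker scores together with the energy of the captured signal, the loudest device is taken as a crude proxy for the one nearest the user, and the scores are averaged with energy weights. This Python sketch is a hypothetical illustration of that idea (the report format, device names, and weighting are assumptions); the source does not describe DavinSy’s actual protocol.

```python
from dataclasses import dataclass, field


@dataclass
class DeviceReport:
    """What each device is assumed to share on the local network."""
    device: str
    energy: float                               # mean power of the captured utterance
    scores: dict = field(default_factory=dict)  # speaker -> local probability


def fuse(reports):
    """Energy-weighted late fusion of per-device speaker scores.

    Returns (loudest device, best fused speaker, fused scores).
    """
    total = sum(r.energy for r in reports)
    if total <= 0:
        raise ValueError("at least one device must capture some energy")
    nearest = max(reports, key=lambda r: r.energy).device
    fused = {}
    for r in reports:
        weight = r.energy / total
        for speaker, p in r.scores.items():
            fused[speaker] = fused.get(speaker, 0.0) + weight * p
    best = max(fused, key=fused.get)
    return nearest, best, fused


if __name__ == "__main__":
    reports = [
        DeviceReport("kitchen_plug", 0.8, {"alice": 0.7, "bob": 0.3}),
        DeviceReport("hall_plug", 0.2, {"alice": 0.4, "bob": 0.6}),
    ]
    nearest, speaker, _ = fuse(reports)
    print(nearest, speaker)  # kitchen_plug alice
```

Weighting by captured energy lets the device with the clearest signal dominate, while still using the others when it is uncertain.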

Secure

DavinSy Voice authenticates the user’s voice and rejects unknown users.

DavinSy ensures that all your communications are encrypted end to end and that data is stored in secure zones.

Personalized

It is always possible for users of DavinSy Voice devices to personalize commands or behavior of the system.

No data drift

DavinSy takes the user’s feedback into account to improve its performance. It can therefore adapt to variations in the user’s voice and to changes in the environment, or learn to better reject specific words.

Offline

DavinSy is standalone and does not rely on any server, which makes it both reliable and responsive.
